The New Paradigm of Visual Interaction in the Age of AI

Foreword

I have long wondered what form the "tools" we take for granted will take in the age of artificial intelligence.

Today, AI is most commonly used through chat interfaces, which typically follow a text-based question-and-answer model. As the technology advances, Vercel has introduced Generative UI, which dynamically generates customized, interactive user interfaces based on the content and needs of a conversation. What form will future UIs take? Will they evolve into what we see in movies, with cool holographic displays and intuitive heads-up displays (HUDs), or will we enter a new era that no longer relies on traditional GUIs?

If natural language becomes the fundamental interface for every software application, it might mean the end of GUIs.

Musings

In the future, will I simply be able to say, "Siri, book me a flight"? Or, as in the brain-computer interface experiments of Elon Musk's Neuralink, will information be transmitted by thought alone? The necessary information could be displayed directly in front of the eyes (if so, could blind people see it?) without any external device, and interactive content could be manipulated with the fingers or eye movements, much as with VR headsets. Perhaps the future will truly resemble Black Mirror.

Research

AI Pin

The Ai Pin from Humane is considered a pioneer among these devices. It attaches magnetically to clothing, has no screen, and is controlled by voice and a small touchpad. A built-in laser projects images onto your palm, and a camera recognizes hand gestures for navigating and performing other operations.

Its current and possible future features include laser projection display, gesture and voice interaction, an AI assistant, real-time translation, health monitoring, music playback, and message alerts.

Rabbit

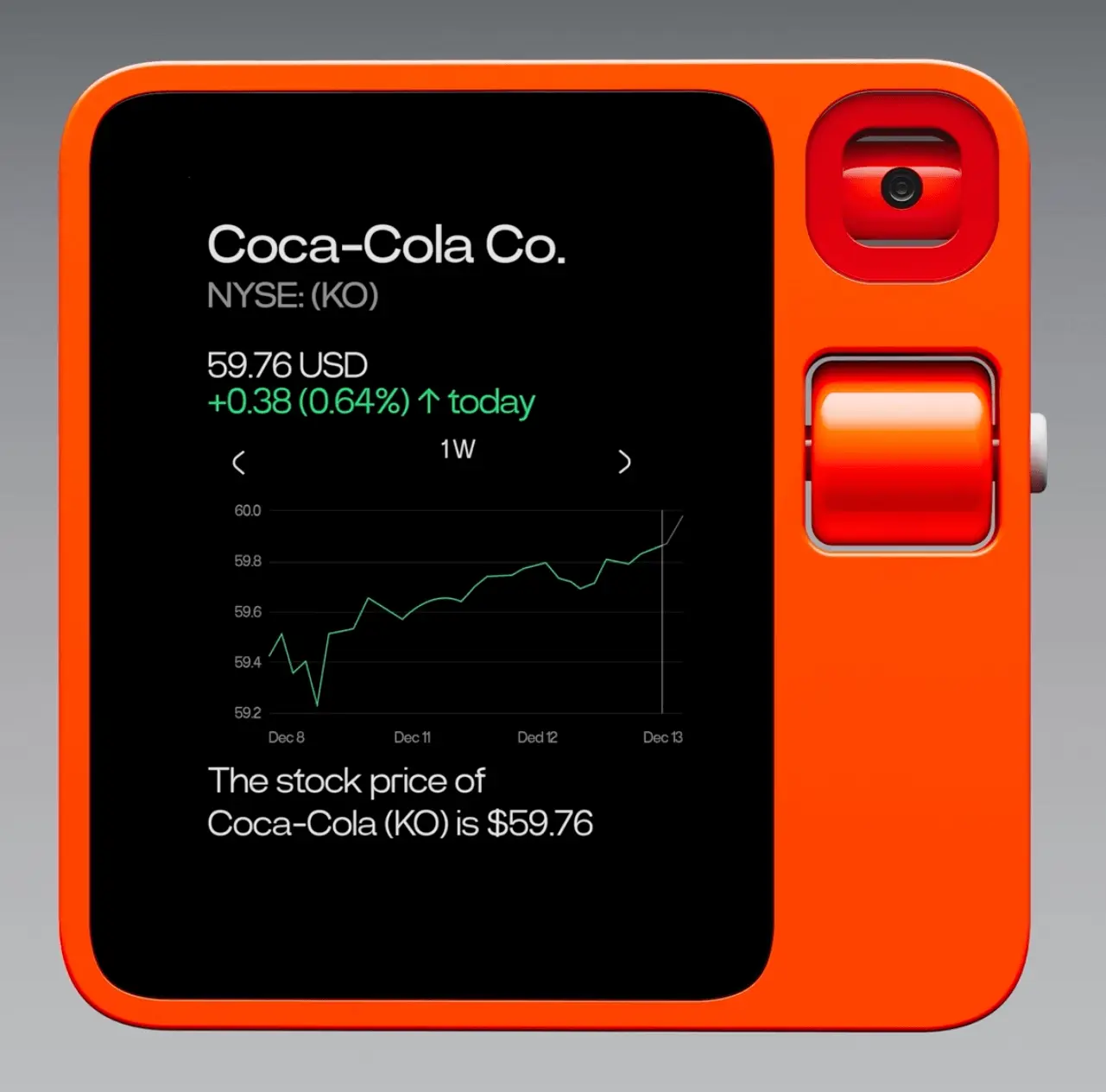

The Rabbit R1 is an AI device from Rabbit, positioned as a pocket companion. It consists mainly of a screen, a camera, a scroll wheel, and a button.

Its current and possible future features include understanding and executing actions (hailing a taxi, ordering food, playing music), real-time translation, and a teach mode.

Dot

Dot is an iOS chat app that lets users send text, voice memos, images, and PDF files, and it can also search the web on their behalf. Dot currently communicates via text and aims to be an ever-present companion. Unlike most AI chatbots, Dot remembers what you have told it before and rarely forgets anything.

Amazon Lex

Amazon Lex V2 is an AWS service that lets developers build conversational interfaces into applications, supporting both voice and text input. Users can interact in natural language, for example to place an order. Lex V2 can also be combined with UI elements such as buttons and selection lists, using Semi-Guided Conversations to resolve users' problems or help them complete specific tasks.

Summary

These examples are not exhaustive, but they give a rough picture of the overall trend:

- The hardware is changing. Beyond phones and computers, companies are trying to deliver content through more portable devices, such as necklaces, badges, and pager-like gadgets.

- Visual content is being presented in more diverse ways, such as projection and laser displays. Because these displays are small, information is presented mainly as text, simple charts, and basic buttons.

- Natural language interaction will be used more widely.

My Thoughts

Generative UI is the Future Trend

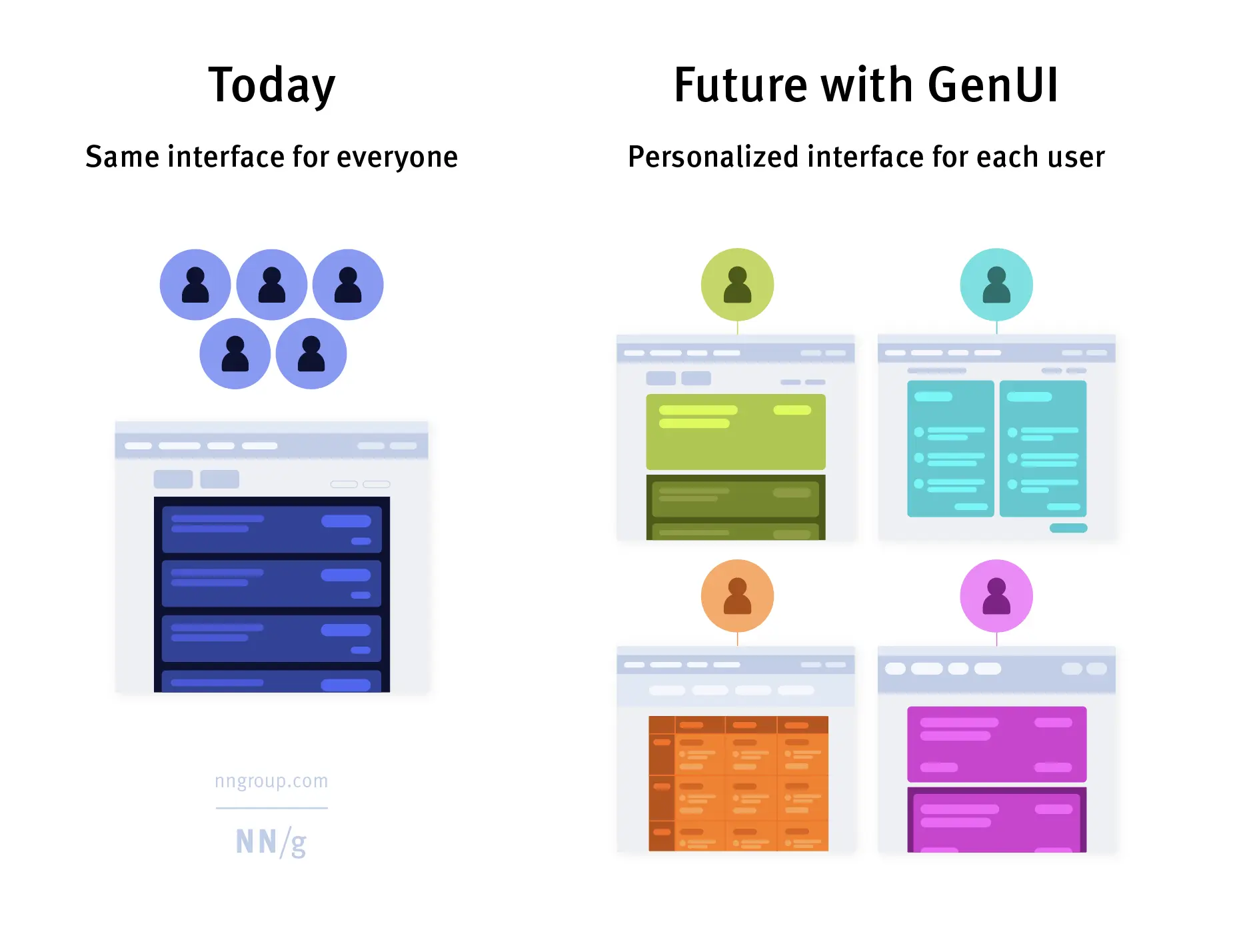

Currently, interface design must satisfy as many people as possible. Any experienced professional designer knows the main drawback of this approach—you can never satisfy everyone completely. Personalization and customization play a relatively minor role.

This idea is somewhat similar to Alipay's home screen, which shows dynamic, personalized cards based on your location (for example, airport-related information when you are at an airport).

Moreover, Generative UI is not limited to dynamic cards; it can dynamically assemble an entire application around personal data. As the article Generative UI and Outcome-Oriented Design illustrates, this is truly "a thousand faces for a thousand people" (extreme personalization):

- A user with dyslexia: the application displays dyslexia-friendly fonts and adjusted color contrast.

- A user focused on cost and time: these factors are displayed prominently, and relevant flights are automatically given more weight.

- Although it is the same application, its interface and features are customized for each person.

Designers will also shift from universal design to personalized design, defining boundaries for various personalized scenarios so that what the AI generates stays within clear constraints.
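To make the idea of designer-defined boundaries more concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the profile fields, the spec keys, the approved components, and the font-size range are invented for illustration, and the rule-based `personalize` function merely stands in for whatever model would actually generate the interface. What it shows is the split of responsibilities: the generator proposes a personalized spec, and a designer-owned `apply_constraints` step clamps that proposal to approved limits.

```python
from dataclasses import dataclass, field

# Hypothetical designer-defined boundaries: personalization may vary
# the interface, but only within these limits.
ALLOWED_COMPONENTS = {"flight_list", "price_chart", "duration_chart", "booking_button"}
FONT_SIZE_RANGE = (14, 24)  # minimum and maximum font size in px


@dataclass
class UserProfile:
    # Hypothetical profile fields mirroring the flight-booking examples above.
    has_dyslexia: bool = False
    priorities: list[str] = field(default_factory=list)  # e.g. ["cost", "time"]


def personalize(profile: UserProfile) -> dict:
    """Rule-based stand-in for a generative model proposing a UI spec."""
    spec = {
        "font_family": "default",
        "font_size": 16,
        "high_contrast": False,
        "components": ["flight_list", "booking_button"],
        "sort_weights": {"cost": 1.0, "time": 1.0},
    }
    if profile.has_dyslexia:
        spec["font_family"] = "dyslexia_friendly"
        spec["font_size"] = 28  # a generator may overshoot; constraints fix it
        spec["high_contrast"] = True
    if "cost" in profile.priorities:
        spec["sort_weights"]["cost"] = 2.0  # give price more weight when ranking
        spec["components"].append("price_chart")
    if "time" in profile.priorities:
        spec["sort_weights"]["time"] = 2.0  # give duration more weight when ranking
        spec["components"].append("duration_chart")
    return spec


def apply_constraints(spec: dict) -> dict:
    """Clamp a proposed spec to the designer-defined boundaries."""
    low, high = FONT_SIZE_RANGE
    checked = dict(spec)
    checked["font_size"] = max(low, min(high, spec["font_size"]))
    # Drop any component the designers have not approved.
    checked["components"] = [c for c in spec["components"] if c in ALLOWED_COMPONENTS]
    return checked


if __name__ == "__main__":
    user = UserProfile(has_dyslexia=True, priorities=["cost", "time"])
    print(apply_constraints(personalize(user)))
```

Unapproved output is filtered the same way: `apply_constraints({**personalize(UserProfile()), "components": ["flight_list", "holographic_hud"]})` keeps only `flight_list`. Keeping the check separate from generation means the generator could be swapped for a real model without touching the designers' rules.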

Traditional Interaction Will Not Be Replaced, But Will Be Greatly Simplified

Although natural language interaction has its advantages, visual elements such as buttons, icons, and gestures remain necessary where input must be fast and precise; relying on voice alone may reduce efficiency. Moreover, some tasks simply cannot be completed effectively through conversation.

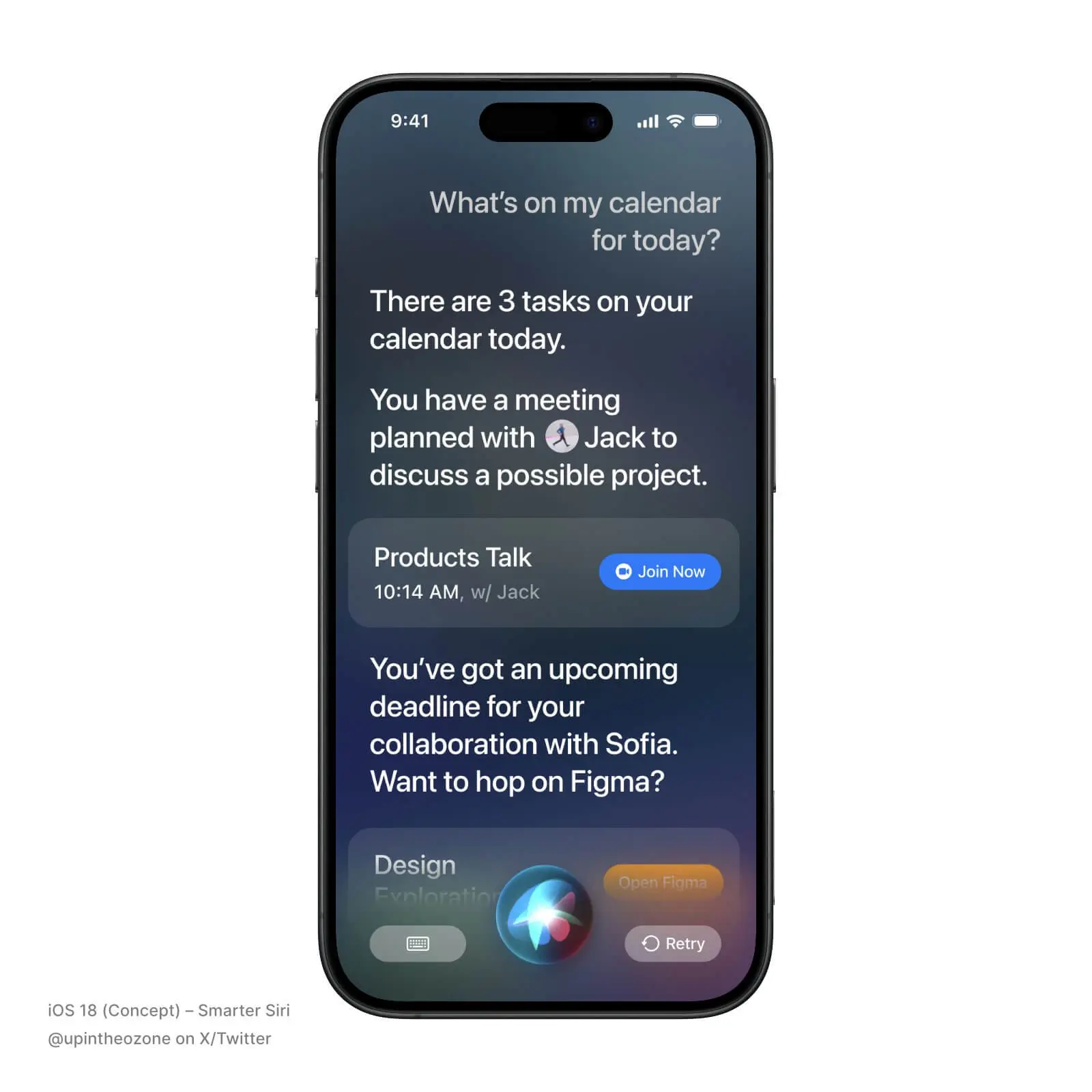

Different interaction methods suit different situations. Offering both visual and voice input and output best supports accessibility principles, letting people with disabilities choose the methods that work for them. Once LLMs can complete specific tasks on the user's behalf, interfaces can be greatly simplified.

Additionally, natural language interaction does not suit every setting; in an office or a library, for example, speaking requests aloud could lead to privacy leaks.

Final Thoughts

Although I set out to write as broadly as possible, I ended up returning to the GUI itself, mostly pondering the future form of existing products...

References

- ScreenAI: A visual language model for UI and visually-situated language understanding

- The AI Device Revolution Isn’t Going to Kill the Smartphone

- Meet Dot, an AI companion designed by an Apple alum, here to help you live your best life

- Malleable software in the age of LLMs

- Generative UI and Outcome-Oriented Design

- UFO: A UI-Focused Agent for Windows OS Interaction

- Ferret-UI: Grounded Mobile UI Understanding with Multimodal LLMs