Lokalise MCP Automatic Translation Solution

Foreword

As the saying goes, "laziness" is the primary productive force.

Our current i18n workflow is cumbersome: design the copy in English, translate every word and sentence into as many as 20 languages on the Lokalise platform, and then annotate each translation key back in Figma for developers.

This "Translate -> Annotate -> Enter Key" loop eats up a large share of the time spent on app requirement design. Faced with such high-frequency, repetitive, low-value work, I drew on my team's earlier i18n experience and designed an automated solution.

The multilingual platform we currently use is Lokalise, so the solution below focuses on Lokalise, although the same approach should carry over to other platforms.

Basic Concept

Full automation basically takes two steps:

- Find a knowledgeable translator: AI translation is powerful, but it doesn't understand business terminology (e.g., translating "Gas" as "gas/air"). It might also translate words that shouldn't be translated. Therefore, we need to inject business context and set rules for the AI.

- Give the AI "hands": After translation, copying and pasting item by item into the Lokalise backend is the most tiring part. By using the MCP protocol, the AI can directly call the Lokalise API.
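To make "giving the AI hands" concrete: when an MCP client decides to use a tool, it sends the server a JSON-RPC 2.0 request with the method `tools/call`. The sketch below only builds and prints such a message for the create-keys tool introduced in the next section. The helper name `buildToolCall`, the project ID, and the sample key and language codes are placeholders for illustration, not values from the real project.

```typescript
// Shape of an MCP "tools/call" request (JSON-RPC 2.0).
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Hypothetical helper: build the request a client sends to invoke a tool by name.
function buildToolCall(
  id: number,
  name: string,
  args: Record<string, unknown>
): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

const request = buildToolCall(1, "create-keys", {
  projectId: "your-project-id", // placeholder, not a real project ID
  keys: [
    {
      keyName: "global::cancel",
      platforms: ["web", "ios", "android", "other"],
      translations: [
        { languageIso: "en", translation: "Cancel" },
        { languageIso: "de", translation: "Abbrechen" },
      ],
    },
  ],
});

console.log(JSON.stringify(request, null, 2));
```

In practice the client (Claude Desktop, Claude Code, Gemini CLI) builds and sends this message itself; you never write it by hand.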

Final Solution

The technology stack I chose is Node.js + MCP SDK + Client Tool.

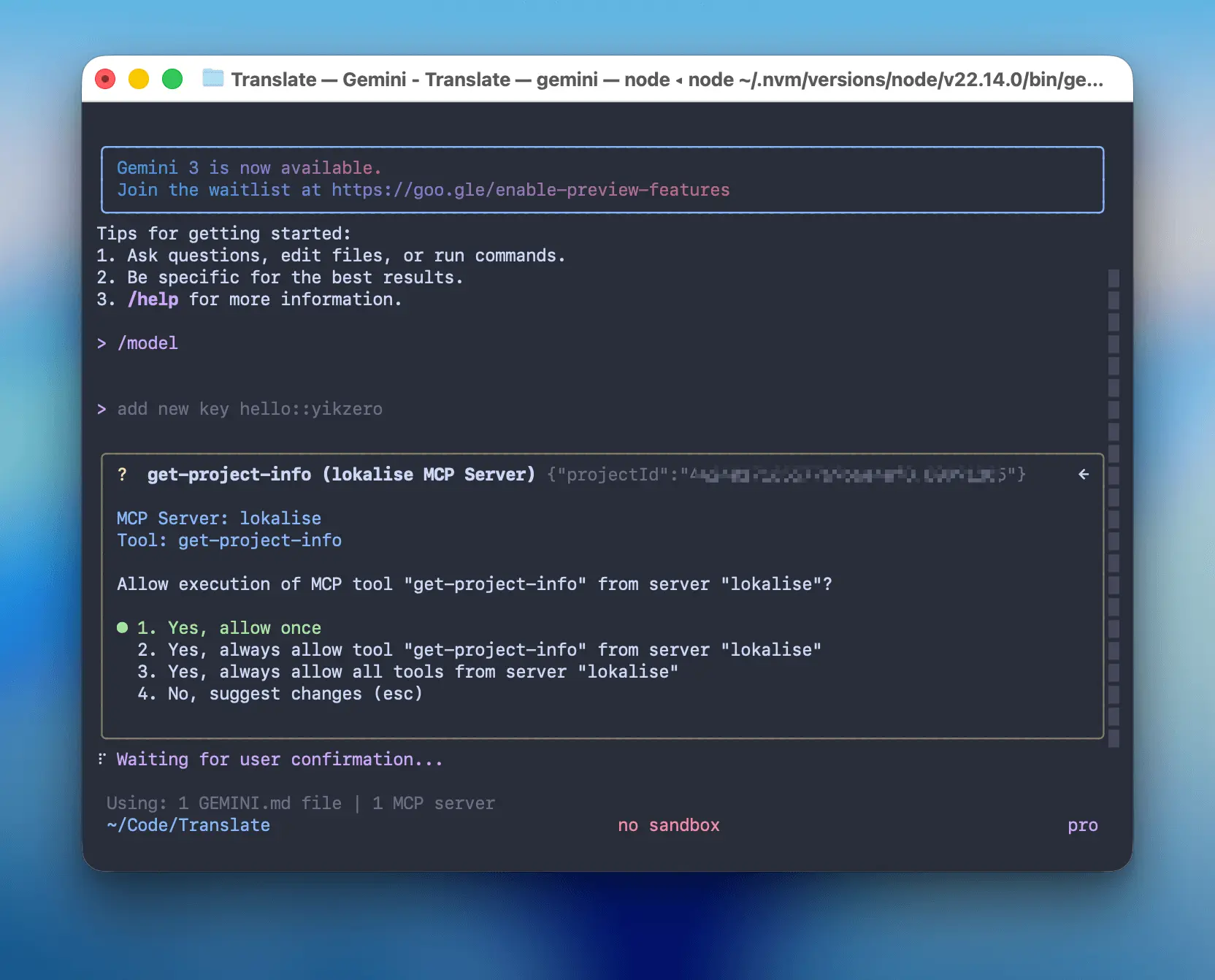

The choice of client is flexible; anything that supports MCP works. Initially I used Claude Desktop, but command-line enthusiasts can replace it entirely with Claude Code or Gemini CLI, and the experience is equally seamless.

Gemini might be better suited for liberal arts tasks like translation, so I recommend it.

1. Core Code (MCP Server)

Lokalise itself provides a complete REST API, which conveniently allows us to operate it through code.

To minimize boilerplate code, I used the official Node.js SDK (@lokalise/node-api) directly. Following the official MCP documentation, I wrapped these APIs in an MCP Server.

It primarily exposes two tools to the AI:

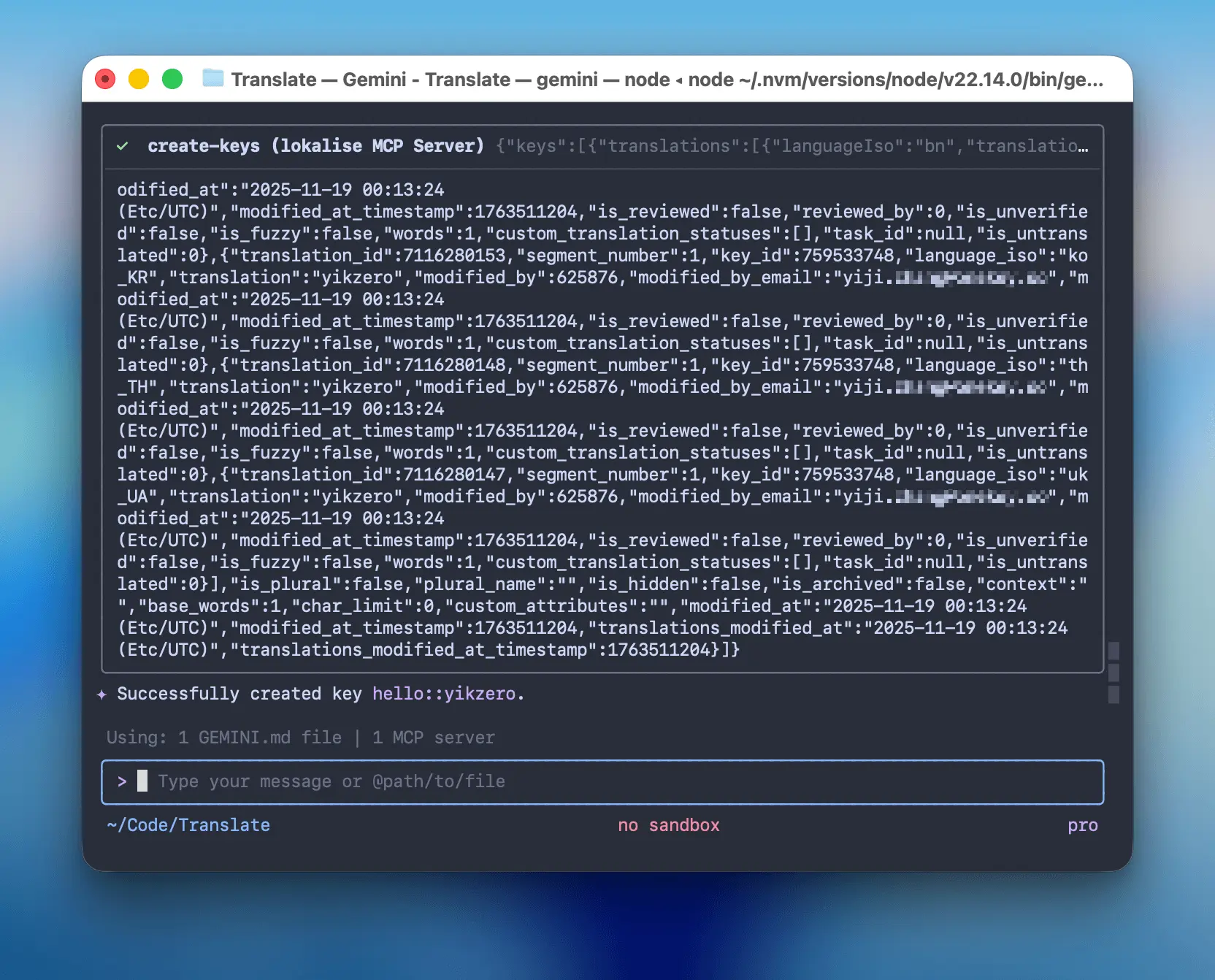

- search-keys: Used for checking duplication, to see whether someone has already translated the copy.

- create-keys: Used for the heavy lifting, writing the AI-generated multilingual copy to the backend.
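The duplicate check behind search-keys can be pictured as follows. This is a minimal sketch, not the author's implementation: `ExistingKey`, `normalize`, and `findDuplicates` are hypothetical names, the sample data is made up, and in the real server the list of existing keys would come from the Lokalise API rather than an in-memory array.

```typescript
// Hypothetical shape of a key that already exists in the project.
interface ExistingKey {
  keyName: string;
  translations: Record<string, string>; // language ISO code -> text
}

// Normalize copy so trivial differences (case, spacing) do not hide a match.
function normalize(text: string): string {
  return text.trim().toLowerCase().replace(/\s+/g, " ");
}

// Return existing keys whose source-language text matches the new copy.
function findDuplicates(
  existing: ExistingKey[],
  sourceText: string,
  sourceLang = "en"
): ExistingKey[] {
  const wanted = normalize(sourceText);
  return existing.filter((key) => {
    const text = key.translations[sourceLang];
    return text !== undefined && normalize(text) === wanted;
  });
}

// Made-up sample data for illustration.
const existingKeys: ExistingKey[] = [
  { keyName: "global::cancel", translations: { en: "Cancel", de: "Abbrechen" } },
  { keyName: "wallet::import_wallet", translations: { en: "Import wallet" } },
];

const matches = findDuplicates(existingKeys, "  import  Wallet ");
console.log(matches.map((k) => k.keyName));
```

If a match comes back, the AI can reuse the existing key instead of creating a second one for the same copy.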

The logic is actually not complicated; essentially, it acts as an API intermediary:

Core code of index.ts:

#!/usr/bin/env node

import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";
import { LokaliseApi, SupportedPlatforms } from "@lokalise/node-api";
import { z } from "zod";

// Credentials and defaults come from the env block of the MCP configuration file.
const LOKALISE_API_KEY = process.env.LOKALISE_API_KEY;
const DEFAULT_PROJECT_ID = process.env.DEFAULT_PROJECT_ID;
const DEFAULT_PLATFORMS = ["web", "ios", "android", "other"];
const PLATFORMS = (
  process.env.PLATFORMS
    ? process.env.PLATFORMS.split(",").map((p) => p.trim())
    : DEFAULT_PLATFORMS
) as SupportedPlatforms[];

const lokaliseApi = new LokaliseApi({ apiKey: LOKALISE_API_KEY });

const server = new McpServer(
  {
    name: "lokalise-mcp",
    version: "1.0.0",
    author: "yikZero",
  },
  {
    capabilities: {
      tools: {},
    },
  }
);

// ... get-project-info and search-keys tool code omitted ...

server.registerTool(
  "create-keys",
  {
    title: "Create Keys",
    description: "Creates one or more keys in the project",
    inputSchema: {
      projectId: z
        .string()
        .describe(
          "A unique project identifier, if not provided, first use get-project-info tool to get the project id"
        ),
      keys: z.array(
        z.object({
          keyName: z.string().describe("Key identifier"),
          platforms: z
            .array(z.enum(["web", "ios", "android", "other"]))
            .describe(
              "Platforms for the key. If not provided, use the default platforms: " +
                PLATFORMS.join(", ")
            ),
          translations: z
            .array(
              z.object({
                languageIso: z
                  .string()
                  .describe(
                    "Unique code of the language of the translation, get the list from the get-project-info tool"
                  ),
                translation: z
                  .string()
                  .describe("The actual translation text"),
              })
            )
            .describe("Translations for all languages"),
        })
      ),
    },
  },
  async ({ projectId, keys }) => {
    // Map the camelCase tool input onto the snake_case payload the Lokalise API expects.
    const response = await lokaliseApi.keys().create(
      {
        keys: keys.map((key) => ({
          key_name: key.keyName,
          platforms: key.platforms,
          translations: key.translations.map((t) => ({
            language_iso: t.languageIso,
            translation: t.translation,
          })),
        })),
      },
      { project_id: projectId }
    );

    return {
      content: [
        {
          type: "text",
          text: JSON.stringify(response),
        },
      ],
      structuredContent: response as any,
    };
  }
);

// ... update-keys tool code omitted ...

async function main() {
  const transport = new StdioServerTransport();
  await server.connect(transport);
  // Log to stderr: stdout is reserved for the MCP stdio transport.
  console.error("Lokalise MCP Server running on stdio");
}

main().catch((error) => {
  console.error("Fatal error in main():", error);
  process.exit(1);
});

2. Training the AI (Prompt)

This step resembles the Project feature in the Claude web version, which lets you preset a system prompt and attach supplementary materials. Both come down to giving the AI the background knowledge it needs to work well.

Therefore, to make the AI act like an experienced employee, I put the OneKey glossary and the Key naming convention (snake_case) into a GEMINI.md file. If you use Claude Code, you can write a CLAUDE.md file instead.

There is also a unified AGENTS.md, but the grand unification effort is not yet complete.

GEMINI.md prompt details:

You are a professional web3 project i18n translation expert specializing in translating words and sentences into multiple languages for software localization.

<onekey_background>

OneKey is a comprehensive cryptocurrency wallet solution...

</onekey_background>

<i18n_supported_languages>

English (United States), Bengali, Chinese Simplified...

</i18n_supported_languages>

<technical_terms>

OneKey, WalletConnect, iCloud, Google Drive, OneKey Lite, OneKey KeyTag, Face ID, Touch ID...

</technical_terms>

<translation_namespaces>

global, onboarding, wallet, swap, earn, market, browser, settings, prime

</translation_namespaces>

Translation Process:

1. Direct Translation:

- Provide a literal translation of the word/sentence into all supported languages

- If the text contains a number, replace it with {number}

- Preserve all technical terms and variables, like {variableName}, HTML tags, etc

2. Refined Translation:

- Create an optimized version that enhances clarity and natural flow in each language

- Ensure cultural appropriateness while preserving the original meaning

- When translating, take cultural factors into account and try to use expressions that fit the cultural habits of the target language, especially when dealing with metaphors, slang, or idioms

- Preserve specific professional terminologies within certain industries or fields unchanged, unless there is a widely accepted equivalent expression in the target language

- Titles must use sentence case

- Insert a half-width space between a full-width character (e.g., Kanji) and a half-width character (letter, number, or symbol)

3. Key Generation:

- Create a self-explanatory key in snake_case format

- Structure as namespace::descriptive_key_name

- When recognized as a common term, please use global namespace

- Key must accurately represent the content's purpose

- Avoid hyphens (-) and curly braces ({})

Output Format:

1. Key: [namespace::descriptive_key_name]

2. Direct Translation Table (brief reference)

3. Refined Translation Table (primary focus)

Items to check:

1. If the user provides the Key, use the user-provided Key.

2. If there is an obvious spelling error, please correct it and let me know.

3. After the translation is completed, call the tool to create/update the corresponding key.

4. First, provide the Table, then create or update the Key based on the translations in the Refined Table.

3. Configuration File

Finally, in the MCP configuration file (such as claude_desktop_config.json or .mcp.json), we mount the Server we wrote.

{
  "mcpServers": {
    "lokalise": {
      "command": "node",
      "args": ["/Users/yikzero/Code/lokalise-mcp/build/index.js"],
      "env": {
        "LOKALISE_API_KEY": "xxxx969xxxxxxxx7c18760c",
        "DEFAULT_PROJECT_ID": "44xxxxxe4ef8.8099xx5",
        "PLATFORMS": "ios,android,web,other"
      }
    }
  }
}

Practical Case Study

Fully Automated Process from Design Draft to Code

Let's take our recently developed "Network Detection Feature" as an example. This is a relatively complex business scenario involving multiple network error status prompts. Every error message needs i18n, totaling over 50 pieces of text that require translation. If handled manually, just translating, filling keys, and aligning copy would likely take a full day.

Here is how I solved it:

- Claude Code Requirement Organization: Let Claude analyze the code logic, collect every string that needs translation, and output a Markdown list.

- Gemini Translation: Feed the Markdown to Gemini 2.5 Pro (CLI) and let it translate according to our built-in prompt. It handles professional terms like "VPN" and "Proxy" according to the rules in <technical_terms> and automatically generates standardized Keys (e.g., settings::network_error_timeout).

- Claude Code Code Replacement: Once the translated draft containing the Keys is ready, feed it back to Claude Code and let it replace the hard-coded strings in the code with a single command.

Claude excels at code analysis, Gemini excels at multilingual translation, and MCP is responsible for breaking down data barriers. With each performing its function, efficiency has improved significantly.
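Because generated Keys end up in code, it can be worth checking them mechanically against the convention from the prompt (namespace::descriptive_key_name, snake_case, no hyphens or curly braces) before they are written to Lokalise. The following is a minimal sketch under that assumption: `validateKey` is a hypothetical helper that is not part of the open-source server, and the namespace list is copied from the <translation_namespaces> block above.

```typescript
// Namespaces listed in the prompt's <translation_namespaces> block.
const NAMESPACES: string[] = [
  "global", "onboarding", "wallet", "swap", "earn",
  "market", "browser", "settings", "prime",
];

// Hypothetical helper: returns a list of problems.
// An empty list means the key follows the naming convention.
function validateKey(key: string): string[] {
  const parts = key.split("::");
  if (parts.length !== 2) {
    return ["expected exactly one '::' separator"];
  }
  const namespace = parts[0];
  const name = parts[1];
  const problems: string[] = [];
  if (NAMESPACES.indexOf(namespace) === -1) {
    problems.push(`unknown namespace "${namespace}"`);
  }
  // snake_case: lowercase letters and digits, words joined by single underscores.
  // This also rejects hyphens and curly braces.
  if (!/^[a-z][a-z0-9]*(_[a-z0-9]+)*$/.test(name)) {
    problems.push(`"${name}" is not snake_case`);
  }
  return problems;
}

const candidates = [
  "settings::network_error_timeout",
  "settings::network-error",
  "payments::fee",
  "network_error",
];
for (const key of candidates) {
  const problems = validateKey(key);
  console.log(key, problems.length === 0 ? "ok" : problems.join("; "));
}
```

A check like this could run inside the create-keys handler or as a separate lint step; where to put it is a design choice the article does not prescribe.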

Daily Minor Translations

For daily minor requirements, using it alongside design drafts is also fantastic.

For instance, right after drawing a new Onboarding modal in Figma, I take a quick screenshot and hand it to the AI, saying:

"This is a new import wallet modal. Process the copy inside, and place the Key under onboarding."

The AI will automatically execute: Visual Recognition (extracting copy) -> Dual Translation (literal + refined translation) -> Automatic Entry (writing via MCP).

You just need to grab a coffee and wait for the Review.
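Part of that review can itself be automated. The prompt asks the AI to preserve variables such as {variableName} and HTML tags, so one simple check is to confirm that every placeholder and tag in the source copy still appears in each translation. This is a sketch of that idea only: `extractTokens` and `missingTokens` are hypothetical helpers, and the sample strings are invented for illustration.

```typescript
// Collect {variable} placeholders and HTML-like tags from a string.
function extractTokens(text: string): string[] {
  const found = text.match(/\{[A-Za-z_][A-Za-z0-9_]*\}|<\/?[A-Za-z][^>]*>/g);
  return found ? found.slice() : [];
}

// Return the tokens from the source that the translation lost or altered.
function missingTokens(source: string, translation: string): string[] {
  const remaining = extractTokens(translation);
  const missing: string[] = [];
  for (const token of extractTokens(source)) {
    const index = remaining.indexOf(token);
    if (index === -1) {
      missing.push(token);
    } else {
      remaining.splice(index, 1); // each occurrence may only be matched once
    }
  }
  return missing;
}

// Invented sample copy; the Japanese entry contains a deliberately broken placeholder.
const source = "Send {number} tokens to <b>{address}</b>";
const translations: Record<string, string> = {
  de: "Sende {number} Token an <b>{address}</b>",
  ja: "{number} トークンを <b>{adresse}</b> に送信",
};

Object.keys(translations).forEach((lang) => {
  const missing = missingTokens(source, translations[lang]);
  console.log(lang, missing.length === 0 ? "ok" : "missing: " + missing.join(", "));
});
```

A check like this only catches mechanical mistakes; wording and tone still need a human reviewer.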

Conclusion

I used Claude to write an MCP Server and then handed it to Gemini CLI so that Gemini could do better work. Who exactly is the capitalist here? (Joking.)

The code mentioned in this article is open source for anyone interested:

- MCP Server: lokalise-mcp

- Integration Configuration Case: onekey-translate